LLM Assistance with MCP: Building smart APIs in 2025

Introduction

In 2025, the focus is on smart, modular backends that can interact seamlessly with AI assistants and LLMs. Instead of isolated REST APIs, modern systems increasingly rely on the Model Context Protocol (MCP) to expose APIs in a standardized, AI-friendly way.

In this article, you’ll learn how to:

- Build a backend with Python FastAPI

- Integrate an MCP server using Python FastMCP + StreamableHTTP

- Annotate and document your tools automatically

- Prepare your MCP server for chatbots or LLM-driven automation

What is MCP?

The Model Context Protocol (MCP) is an open standard, originally introduced by Anthropic, that defines how applications can expose tools, data, and context to large language models (LLMs). You can think of MCP as a universal interface for AI applications — similar to USB-C in the hardware world — that lets models discover and interact with backend services in a structured way.

Why MCP Matters for Modern Backends

- Modularity: MCP allows different data sources and tools to be integrated through a single, unified interface.

- Automation: LLMs can directly call the provided tools, enabling complex workflows without manual intervention.

- Flexibility: Standardized protocols make it easy to swap or combine tools and data sources.

- Safety & Control: The MCP specification recommends that hosts ask the user to confirm tool invocations, which keeps a human in the loop for actions the AI triggers.

Evolving Specification

MCP is under active development and the specification changes quickly. New revisions have appeared every few months, so developers should expect breaking changes as the protocol matures. The current official specification was published in June 2025.

MCP specification used in this post: 2025-06-18.

MCP Architecture

MCP follows a client-server architecture:

- MCP Host: The AI application (for example a chat assistant or an agent built around an LLM) that consumes tools or data. It runs one MCP client per connected server.

- MCP Server: Provides functions (tools), datasets (resources), or instructions (prompts) to the host

- Transport: JSON-RPC 2.0 messages sent over stdio or Streamable HTTP (which replaces the older HTTP+SSE transport)
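To make the transport bullet more concrete, here is a minimal sketch of the JSON-RPC 2.0 envelopes an MCP client sends. The method names `tools/list` and `tools/call` come from the MCP specification. The helper function and the `get_orders` tool with its `since` argument are hypothetical and only serve as illustration.

```python
import itertools
import json
from typing import Optional

# Every JSON-RPC request carries an id so the response can be matched to it.
_ids = itertools.count(1)


def jsonrpc_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request envelope (hypothetical helper)."""
    message = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        message["params"] = params
    return message


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. The tool name and its argument are assumptions.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "get_orders", "arguments": {"since": "2025-06-11"}},
)

print(json.dumps(list_tools, indent=2))
print(json.dumps(call_tool, indent=2))
```

The same envelopes are used on both transports. Only the way the bytes travel (stdin/stdout or HTTP) differs.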

Example Interaction

- A user asks an AI model: “Show me last week’s orders.”

- The model identifies that it needs to fetch order data and invokes a tool like get_orders via MCP.

- The MCP server executes the function and returns the results to the model, which presents them to the user.
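The three steps above can be sketched as a small in-process simulation. This is a toy model, not a real MCP server: the order data, the `tool` decorator, and `handle_tool_call` are hypothetical, and the model's decision in step 2 is hard-coded.

```python
from datetime import date, timedelta

# Hypothetical in-memory data standing in for a real order store.
ORDERS = [
    {"id": 1, "item": "Laptop", "date": date(2025, 6, 10)},
    {"id": 2, "item": "Headphones", "date": date(2025, 6, 16)},
]

# Registry of callable tools, keyed by tool name.
TOOLS = {}


def tool(fn):
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def get_orders(since: str) -> list:
    """Return orders placed on or after the ISO date `since`."""
    cutoff = date.fromisoformat(since)
    return [order for order in ORDERS if order["date"] >= cutoff]


def handle_tool_call(name: str, arguments: dict):
    """Server side: look up the requested tool and execute it."""
    if name not in TOOLS:
        raise KeyError(f"Unknown tool: {name}")
    return TOOLS[name](**arguments)


# Step 1: the user asks for last week's orders (the question itself is not modelled).
# Step 2: the model picks get_orders and fills in the argument.
today = date(2025, 6, 18)  # fixed date so the example is reproducible
arguments = {"since": (today - timedelta(days=7)).isoformat()}

# Step 3: the server runs the tool and returns the result to the model.
result = handle_tool_call("get_orders", arguments)
for order in result:
    print(order["id"], order["item"], order["date"].isoformat())
```

In a real setup, step 2 is a `tools/call` request sent by the MCP client inside the host, and step 3 is the server's JSON-RPC response.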

Example Project

We will build a simple order management system that lets you retrieve order details by ID. We use FastAPI to create the REST API and then wrap it in an MCP server using FastMCP.

Architecture Overview

Here’s the architecture of our example backend:

- Orders API: Provides data (e.g., orders) via REST

- MCP Server: Wraps the API and exposes tools for LLMs

- Inspector / LLM: Automatically discovers tools and interacts with them

Project structure

    backend-mcp-demo/
    ├── api/
    │   ├── pyproject.toml
    │   └── api.py
    ├── mcp/
    │   ├── pyproject.toml
    │   └── mcp_server.py
    ├── docker-compose.yml
    ├── api.Dockerfile
    └── mcp.Dockerfile

REST API

We start by creating a simple REST API using FastAPI:

api/pyproject.toml:

    [project]
    name = "orders-api"
    version = "0.1.0"
    requires-python = ">=3.10"
    dependencies = [
        "fastapi",
        "uvicorn[standard]",
    ]

    [build-system]
    requires = ["hatchling"]
    build-backend = "hatchling.build"

    [tool.hatch.build.targets.wheel]
    packages = ["."]

api/api.py:

    from fastapi import FastAPI

    app = FastAPI(
        title="Orders API",
        description="Demo-API for order management",
        version="1.0.0",
    )

    # In-memory demo data, keyed by order ID
    orders = {
        1: {"id": 1, "item": "Laptop", "status": "shipped"},
        2: {"id": 2, "item": "Headphones", "status": "processing"},
    }

    @app.get("/orders/{order_id}")
    def get_order(order_id: int):
        # Unknown IDs return an error payload (with HTTP status 200)
        return orders.get(order_id, {"error": "Order not found"})
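To check the endpoint by hand once the API is running, a small standard-library client is enough. This is a sketch under assumptions: the base URL `http://localhost:8000` matches the port used later in this article, and `order_url` and `fetch_order` are hypothetical helper names.

```python
import json
import urllib.request


def order_url(order_id: int, base_url: str = "http://localhost:8000") -> str:
    """Build the URL of the GET /orders/{order_id} endpoint."""
    return f"{base_url.rstrip('/')}/orders/{order_id}"


def fetch_order(order_id: int, base_url: str = "http://localhost:8000") -> dict:
    """Request one order from the running API and decode the JSON body."""
    with urllib.request.urlopen(order_url(order_id, base_url), timeout=5) as response:
        return json.load(response)
```

With the API up, `fetch_order(1)` returns the Laptop order, and an unknown ID such as `fetch_order(99)` returns `{"error": "Order not found"}`, because the handler falls back to that dictionary instead of raising an HTTP error.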

api.Dockerfile:

    FROM ghcr.io/astral-sh/uv:python3.11-bookworm-slim

    WORKDIR /app

    COPY ./api/pyproject.toml .
    RUN uv sync

    COPY ./api .

    CMD ["uv", "run", "uvicorn", "api:app", "--host", "0.0.0.0", "--port", "8000"]

MCP Server

Next, we wrap the API in a Model Context Protocol server using FastMCP with StreamableHTTP:

mcp/pyproject.toml:

    [project]
    name = "orders-mcp"
    version = "0.1.0"
    requires-python = ">=3.10"
    dependencies = [
        "fastmcp",
        "httpx",
    ]

    [build-system]
    requires = ["hatchling"]
    build-backend = "hatchling.build"

    [tool.hatch.build.targets.wheel]
    packages = ["."]

mcp/mcp_server.py:

    import os

    import httpx
    from fastmcp import FastMCP

    # Base URL of the Orders API; the default is the Compose service name
    API_URL = os.getenv("API_URL", "http://api:8000")

    app = FastMCP("Orders MCP Server")

    @app.tool()
    async def get_order(order_id: int) -> dict:
        """Fetch an order by ID from the Orders API"""
        async with httpx.AsyncClient() as client:
            r = await client.get(f"{API_URL}/orders/{order_id}")
            return r.json()

    if __name__ == "__main__":
        # Start MCP over HTTP using the Streamable HTTP transport
        app.run(transport="http", host="0.0.0.0", port=3000)
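FastMCP builds the tool definition that clients see from the decorated function itself: the function name becomes the tool name, the docstring becomes the description, and the type hints become the input schema. The stdlib-only sketch below illustrates that idea. It is a simplified approximation and not FastMCP's actual implementation. `describe_tool` and `JSON_TYPES` are hypothetical helpers.

```python
import inspect

# Simplified mapping from Python annotations to JSON Schema types.
# Real implementations cover many more types; unknown ones fall back to "string".
JSON_TYPES = {int: "integer", float: "number", str: "string", bool: "boolean"}


def describe_tool(fn) -> dict:
    """Derive a tool description from a function's signature and docstring."""
    properties = {}
    required = []
    for name, param in inspect.signature(fn).parameters.items():
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value must be supplied by the caller.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": fn.__name__,
        "description": inspect.getdoc(fn) or "",
        "inputSchema": {
            "type": "object",
            "properties": properties,
            "required": required,
        },
    }


# Same signature and docstring as the tool in this article; the body is a stub.
async def get_order(order_id: int) -> dict:
    """Fetch an order by ID from the Orders API"""
    return {}


description = describe_tool(get_order)
print(description)
```

This is why precise type hints and a meaningful docstring matter: they are what the LLM reads when it decides whether and how to call the tool.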

mcp.Dockerfile:

    FROM ghcr.io/astral-sh/uv:python3.11-bookworm-slim

    WORKDIR /app

    COPY ./mcp/pyproject.toml .
    # No uv.lock is copied into the image, so dependencies are resolved from pyproject.toml
    RUN uv sync

    COPY ./mcp .

    CMD ["uv", "run", "python", "mcp_server.py"]

Docker Deployment

Create a docker compose file for the API and MCP server:

docker-compose.yml:

    services:
      api:
        build:
          context: .
          dockerfile: api.Dockerfile
        ports:
          - "8000:8000"

      mcp-server:
        build:
          context: .
          dockerfile: mcp.Dockerfile
        depends_on:
          - api
        environment:
          - API_URL=http://api:8000
        ports:
          - "3000:3000"

Let's start the API and MCP server using Docker Compose:

    docker-compose up -d

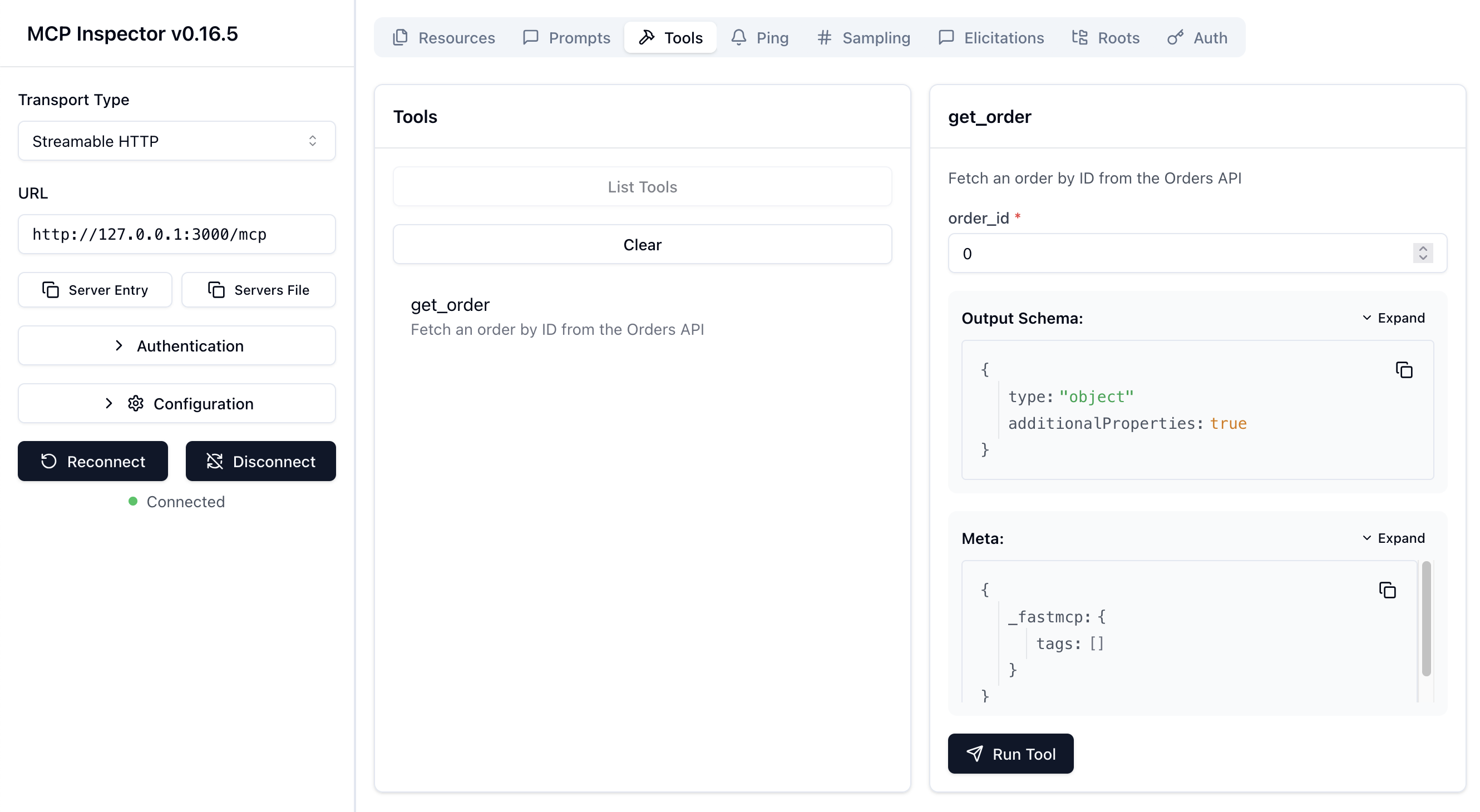
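Containers started in detached mode may need a moment before they accept connections. A small readiness check, sketched here with the standard library only, can wait for both published ports. The function names are hypothetical, and the ports 8000 and 3000 are the ones mapped in the Compose file.

```python
import socket
import time


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_services(targets, attempts: int = 30, delay: float = 1.0) -> bool:
    """Poll until every (host, port) pair accepts connections or attempts run out."""
    for _ in range(attempts):
        if all(is_port_open(host, port) for host, port in targets):
            return True
        time.sleep(delay)
    return False
```

Calling `wait_for_services([("127.0.0.1", 8000), ("127.0.0.1", 3000)])` returns `True` once both the API and the MCP server are reachable. An open port only shows that the process is listening, not that it answers requests correctly.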

MCP Inspector

The @modelcontextprotocol/inspector is a powerful tool for testing and debugging MCP servers. It provides a web-based interface to interact with MCP servers, discover available tools, and test their functionality. Let's install and run it to test the MCP server:

    npm install -g @modelcontextprotocol/inspector
    npx @modelcontextprotocol/inspector --transport http --server http://127.0.0.1:3000/mcp

More information about the MCP Inspector can be found in the official documentation.

If everything is set up correctly, you should see the MCP Inspector interface in your browser at http://localhost:6274. Click Connect, then explore the available tools, test them, and see how they interact with the MCP server.
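Under the hood, every MCP session starts with an `initialize` request. On the Streamable HTTP transport this is an HTTP POST whose `Accept` header lists both `application/json` and `text/event-stream`, because the server may answer with plain JSON or with an event stream. The sketch below only builds that request so its shape can be inspected. It does not send anything, and the `clientInfo` values are made up.

```python
import json
import urllib.request

MCP_URL = "http://127.0.0.1:3000/mcp"  # endpoint used in this article


def build_initialize_request(url: str = MCP_URL) -> urllib.request.Request:
    """Build (but do not send) the first request of an MCP session."""
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "initialize",
        "params": {
            "protocolVersion": "2025-06-18",
            "capabilities": {},
            # Hypothetical client identity, for illustration only.
            "clientInfo": {"name": "demo-client", "version": "0.1.0"},
        },
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",
        },
        method="POST",
    )


request = build_initialize_request()
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

A full client also has to handle the response format, send the `notifications/initialized` notification, and pass along a session ID if the server issues one, which is why using the Inspector or an MCP client library is usually the easier route.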

Integrating MCP into Chatbots

Once the MCP server is running and tested, the next step is integration. Any chatbot or LLM application that includes an MCP client can connect to the server over the Streamable HTTP transport and call the get_order tool.
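As a starting point for such an integration, the following sketch uses the client that ships with FastMCP to list the tools and call `get_order`. It assumes FastMCP 2.x is installed and that the server from this article is reachable at `http://127.0.0.1:3000/mcp`. A real chatbot would pass the tool list to the LLM and let it choose the arguments, which is not shown here.

```python
import asyncio

MCP_URL = "http://127.0.0.1:3000/mcp"  # assumes the Compose stack is running locally


async def main(order_id: int = 1) -> None:
    # Imported here so the module can be loaded even where fastmcp is not installed.
    from fastmcp import Client

    # For an HTTP URL the client connects over the Streamable HTTP transport.
    async with Client(MCP_URL) as client:
        tools = await client.list_tools()
        print("Available tools:", [tool.name for tool in tools])

        result = await client.call_tool("get_order", {"order_id": order_id})
        print(result)


def run() -> None:
    """Synchronous entry point for scripts."""
    asyncio.run(main())
```

Call `run()` while the containers are up. The exact shape of the printed result object depends on the installed FastMCP version.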